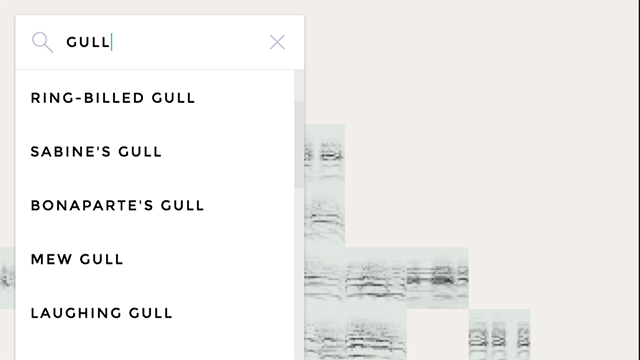

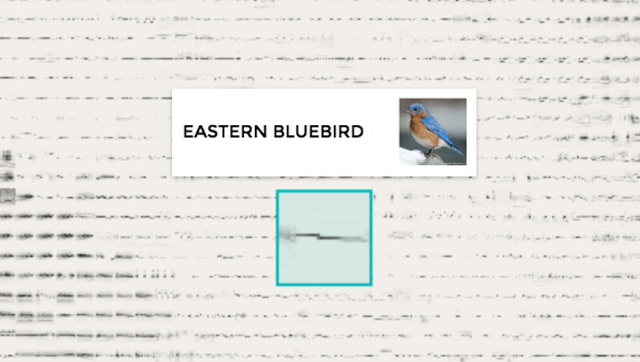

An Artificially Intelligent Database That Learns, Organizes and Visualizes Bird Sounds ‘By Ear’

Coders at the Google Creative Lab are working with the Cornell Lab of Ornithology to teach an experimental artificially intelligent (AI) database to learn, organize and visualize the widely varying sounds of different birds “by ear”, using nothing but the audio itself. Using data from the Cornell Guide to Bird Sounds: Essential Set for North America and a technique called t-SNE (t-distributed stochastic neighbor embedding), the AI finds sounds that are similar to each other and maps them close together.

Bird sounds vary widely. This experiment uses machine learning to organize thousands of bird sounds. The computer wasn’t given tags or the birds’ names – only the audio. Using a technique called t-SNE, the computer created this map, where similar sounds are placed closer together. Built by Kyle McDonald, Manny Tan, Yotam Mann, and friends at Google Creative Lab.
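To give a rough feel for how t-SNE places similar sounds near each other, here is a minimal sketch in Python. It is not the project's actual code. It assumes each clip has already been reduced to a numeric feature vector, and it uses synthetic vectors as a stand-in for real audio features. The use of scikit-learn's `TSNE`, the helper name `embed_sounds`, the two made-up sound groups, and the perplexity value are all illustrative assumptions.

```python
import numpy as np
from sklearn.manifold import TSNE


def embed_sounds(features, perplexity=5.0, seed=0):
    """Project per-clip feature vectors down to 2-D map coordinates with t-SNE.

    `features` is an (n_clips, n_features) array. How those features are
    computed from audio is outside this sketch (hypothetical input).
    """
    tsne = TSNE(
        n_components=2,         # a flat, 2-D map
        perplexity=perplexity,  # roughly, how many neighbours each clip attends to
        init="pca",
        random_state=seed,      # fixed seed so the layout is repeatable
    )
    return tsne.fit_transform(features)


# Synthetic stand-in for audio features: two made-up groups of 20 clips each,
# with 8 numbers per clip. Real clips would need real feature extraction.
rng = np.random.default_rng(0)
chirps = rng.normal(loc=0.0, scale=0.5, size=(20, 8))
trills = rng.normal(loc=10.0, scale=0.5, size=(20, 8))
features = np.vstack([chirps, trills])

# Labels are used only to inspect the result; t-SNE never sees them.
labels = np.array([0] * 20 + [1] * 20)

coords = embed_sounds(features)
print(coords.shape)
```

As in the experiment, the algorithm is given no names or tags, only the vectors. Clips whose vectors are alike end up as neighbours on the resulting map, which can then be drawn as a scatter plot.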