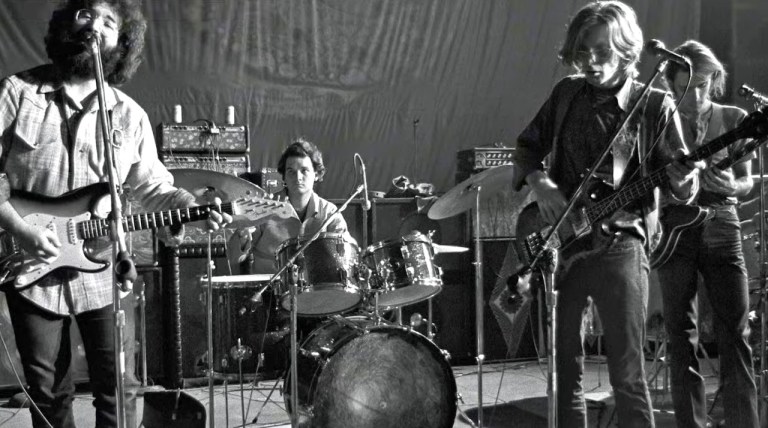

A Deep Learning System That Isolates Individual Instruments in Music Videos

The MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) has developed a deep learning system that automatically isolates the sounds of individual instruments in music videos. Called PixelPlayer, the system is self-supervised and can identify the sounds of more than 20 different instruments, although it can have difficulty distinguishing subclasses of the same instrument.

…a deep-learning system that can look at a video of a musical performance, and isolate the sounds of specific instruments and make them louder or softer. …PixelPlayer uses methods of “deep learning,” meaning that it finds patterns in data using so-called “neural networks” that have been trained on existing videos. Specifically, one neural network analyzes the visuals of the video, one analyzes the audio, and a third “synthesizer” associates specific pixels with specific soundwaves to separate the different sounds.
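The three-network arrangement described in the excerpt can be illustrated with a toy sketch. This is not PixelPlayer's code: it assumes, for illustration only, that the video network outputs a small feature vector per pixel, that the audio network splits the mixture spectrogram into the same number of components, and that the "synthesizer" weights those components by the pixel's features to form a mask over the mixture. The function name, array shapes, and random stand-in data are all hypothetical.

```python
import numpy as np

def separate_for_pixel(pixel_feature, audio_components, mixture_spec):
    """Toy 'synthesizer': estimate the sound associated with one pixel.

    pixel_feature:    (K,) visual features for one pixel (assumed video-network output)
    audio_components: (K, F, T) spectrogram components (assumed audio-network output)
    mixture_spec:     (F, T) non-negative magnitude spectrogram of the mixed audio
    """
    # Weight each audio component by the matching visual feature and sum them.
    logits = np.tensordot(pixel_feature, audio_components, axes=1)  # shape (F, T)
    # Squash to (0, 1) so the result acts as a soft mask over the mixture.
    mask = 1.0 / (1.0 + np.exp(-logits))
    return mask * mixture_spec

# Random stand-ins for the outputs of trained networks (hypothetical sizes).
rng = np.random.default_rng(0)
K, F, T = 4, 8, 10
pixel_feature = rng.normal(size=K)
audio_components = rng.normal(size=(K, F, T))
mixture_spec = np.abs(rng.normal(size=(F, T)))

separated = separate_for_pixel(pixel_feature, audio_components, mixture_spec)

# Making the selected source louder or softer is then a simple gain on its spectrogram.
louder = 2.0 * separated
softer = 0.5 * separated
```

Because the mask lies between 0 and 1, the separated spectrogram never exceeds the mixture at any time-frequency bin. In a real system the features and components would come from trained networks rather than random numbers.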