An Impressive Hands-On Demo of Gemini, A Fully Interactive Multimodal AI Model by Google

Google has launched Gemini, a highly interactive multimodal AI model that can answer questions posed through text, video, audio, images, and code. Demis Hassabis, the head of Google DeepMind, stated that this was a team effort.

Gemini is the result of large-scale collaborative efforts by teams across Google, including our colleagues at Google Research. It was built from the ground up to be multimodal, which means it can generalize and seamlessly understand, operate across and combine different types of information including text, code, audio, image and video.

Google released a demo video featuring impressive hands-on interactions with Gemini.

The Gemini model also set a record of sorts.

Gemini is the first model to outperform human experts on MMLU (Massive Multitask Language Understanding), one of the most popular methods to test the knowledge and problem solving abilities of AI models.

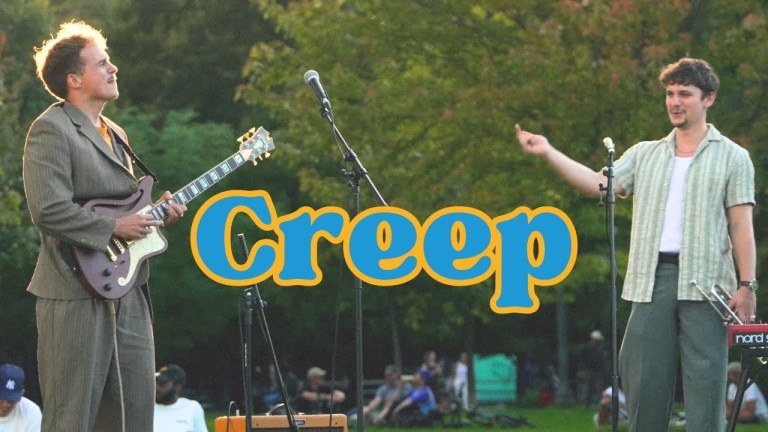

The team tested the system’s knowledge of films through words and visuals.

In this test, let’s see if Gemini can guess the name of a movie based on the play on words hidden in a set of images.

Entertaining science vlogger Mark Rober tested out the Bard (chat) aspect of Gemini by using it to help make the world’s most accurate paper airplane.

Witness a mind-blowing fusion of science and engineering as Mark Rober and Bard collaborate to craft a paper plane that’ll soar to uncharted territories of aerodynamics. Yes, if you’re wondering, Bard wrote this description.

The team also talks about the safety and responsibility involved in developing AI.

From its early stages of development through deployment into our products, Gemini has been developed with responsibility, safety and our AI Principles in mind.