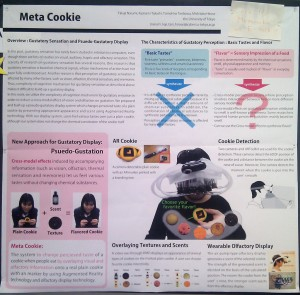

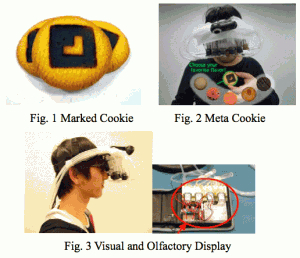

I’ve just returned from SIGGRAPH 2010 in Los Angeles, where a poster presented by four researchers from the University of Tokyo broke new ground in human-computer interaction. While most HCI research and development has focused on display devices that produce visual (monitor), auditory (sound card), and, less commonly, tactile (braille, haptic) output, “Meta Cookie” describes an innovative “wearable olfactory display” that combines “augmented reality technology and olfactory display technology”.

A menu lets the user select which kind of virtual cookie they’d like to eat while holding a uniquely marked “augmented reality cookie” and wearing a special helmet. Cameras on the helmet track the cookie, and a heads-up display overlays the appearance of the selected cookie onto it. The head/cookie tracking system triangulates the cookie’s position in 3D space, while the helmet’s aroma injectors release the selected cookie’s simulated aroma at a strength that varies with the cookie’s distance from the user’s nose. The system’s “palette” can “display” chocolate, almond, tea, strawberry, orange, maple, lemon, and cheese cookies.
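To make the distance-to-strength idea concrete, here is a minimal sketch of how a tracked cookie position could be turned into a scent command. It is not the researchers’ implementation: the linear falloff, the `near`/`far` thresholds (in metres), and the helper names `scent_intensity` and `aroma_command` are all hypothetical assumptions. Only the flavor palette comes from the poster.

```python
import math
from dataclasses import dataclass

# Flavors listed in the poster's "palette"
PALETTE = ("chocolate", "almond", "tea", "strawberry",
           "orange", "maple", "lemon", "cheese")


@dataclass
class Vec3:
    """A point in 3D space, e.g. from the (assumed) head/cookie tracker."""
    x: float
    y: float
    z: float


def distance(a: Vec3, b: Vec3) -> float:
    """Euclidean distance between two tracked points."""
    return math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))


def scent_intensity(cookie: Vec3, nose: Vec3,
                    near: float = 0.05, far: float = 0.5) -> float:
    """Hypothetical linear falloff: 1.0 at or inside `near`, 0.0 at or beyond `far`."""
    d = distance(cookie, nose)
    if d <= near:
        return 1.0
    if d >= far:
        return 0.0
    return (far - d) / (far - near)


def aroma_command(flavor: str, cookie: Vec3, nose: Vec3) -> tuple:
    """Return a (flavor, intensity) pair for a hypothetical aroma injector."""
    if flavor not in PALETTE:
        raise ValueError(f"unknown flavor: {flavor}")
    return flavor, scent_intensity(cookie, nose)
```

For example, `aroma_command("maple", Vec3(0, 0, 0.275), Vec3(0, 0, 0))` places the cookie halfway between the assumed thresholds and yields an intensity of roughly 0.5. A real system would likely use a perceptually tuned curve rather than a straight line. The linear ramp is chosen here only because it is easy to read.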